With the rise of analytics and big data, we need to collect, process, and distribute ever-growing amounts of data, from IoT and mapping to insights into how consumers interact across apps and the internet. Fast, efficient, and effective methods of analyzing that data are becoming more necessary. To date, platforms like Hadoop, Cloudera, Apache Pig, Dryad, SciDB, etc. have served us in a fair capacity; however, the ability to process the vast amounts of data we collect in real time, while the data is “in motion,” has left much to be desired. Surprisingly, much faster processing has already been achieved in many parallel fields. Engineers and scientists have used Field Programmable Gate Arrays (FPGAs) for some time to work with Ultra-Wideband (UWB) RF, vibration monitoring, and real-time safety applications. From here on out, we’re going to explore how we can apply this technology, and the lessons learned from current applications, to empower better and faster analytics.

Blurring the Lines Between Hardware and Software

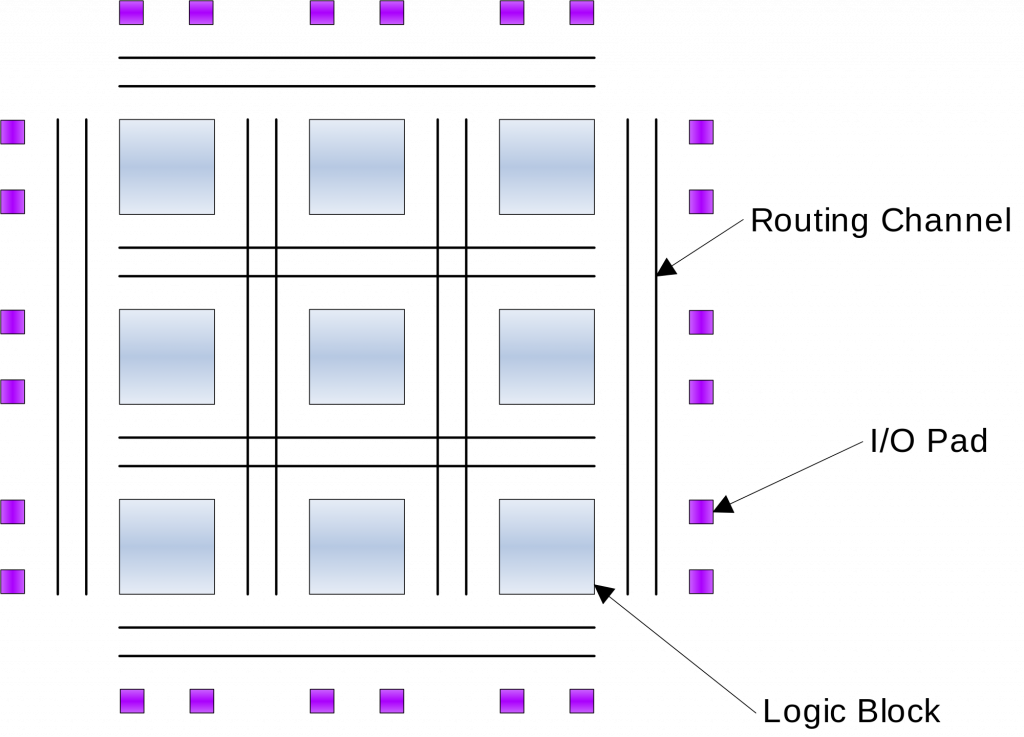

To understand how FPGAs are going to enable our analytics, we first need to understand the technology itself. An FPGA, in short, is a reconfigurable block of silicon gates (Fig. 1). Its configuration is described in a hardware description language such as Verilog or VHDL and then run through a compiler that optimizes for available silicon, target clock speeds, and physical chip layout. The result is a bit-file that can be distributed for field upgrades and performance improvements. FPGAs enable the creation of very specific blocks of code that perform narrowly defined functions and are translated into hardware. Functions like these are used in the H.264 decoding in many of our TVs, running the algorithms for high-speed trading, handling PID functions in cars like Tesla, and monitoring high-speed processes in the F-35.

Figure 1. Generalized FPGA Diagram
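To make the idea of reconfigurable logic a little more concrete, here is a minimal sketch in Python. It assumes a heavily simplified picture in which a logic element is just a small lookup table whose contents decide what function it performs. The `LookupTable` class and its method names are hypothetical and exist only for illustration; real devices and vendor toolchains are far more involved.

```python
from itertools import product


class LookupTable:
    """Toy model of a k-input lookup table used as a configurable logic element."""

    def __init__(self, num_inputs: int):
        self.num_inputs = num_inputs
        # One configuration bit per possible combination of input bits.
        self.config = [0] * (2 ** num_inputs)

    def configure(self, truth_table: list[int]) -> None:
        """Load a new configuration (loosely analogous to loading a bit-file)."""
        if len(truth_table) != len(self.config):
            raise ValueError("truth table size must be 2 ** num_inputs")
        self.config = list(truth_table)

    def evaluate(self, *inputs: int) -> int:
        """Treat the input bits as an address into the configuration."""
        address = 0
        for bit in inputs:
            address = (address << 1) | (bit & 1)
        return self.config[address]


lut = LookupTable(num_inputs=2)

lut.configure([0, 0, 0, 1])  # the element now behaves as an AND gate
and_outputs = [lut.evaluate(a, b) for a, b in product((0, 1), repeat=2)]

lut.configure([0, 1, 1, 0])  # the same element now behaves as an XOR gate
xor_outputs = [lut.evaluate(a, b) for a, b in product((0, 1), repeat=2)]

print(and_outputs)  # [0, 0, 0, 1]
print(xor_outputs)  # [0, 1, 1, 0]
```

The point of the sketch is that nothing about the element itself changes between the two runs; only the configuration loaded into it does.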

By enabling developers to write software that is then translated into a high-speed, gate-level mapping running directly on silicon, we can achieve near-ASIC (Application Specific Integrated Circuit) performance. We are literally blurring the lines between software and hardware to the point where it becomes difficult to tell where software ends and hardware begins. Understanding and utilizing this fusion can help us achieve new heights of analytics performance and meet demand today and in the future.

Optimizing for Computation and Real-Time Processing

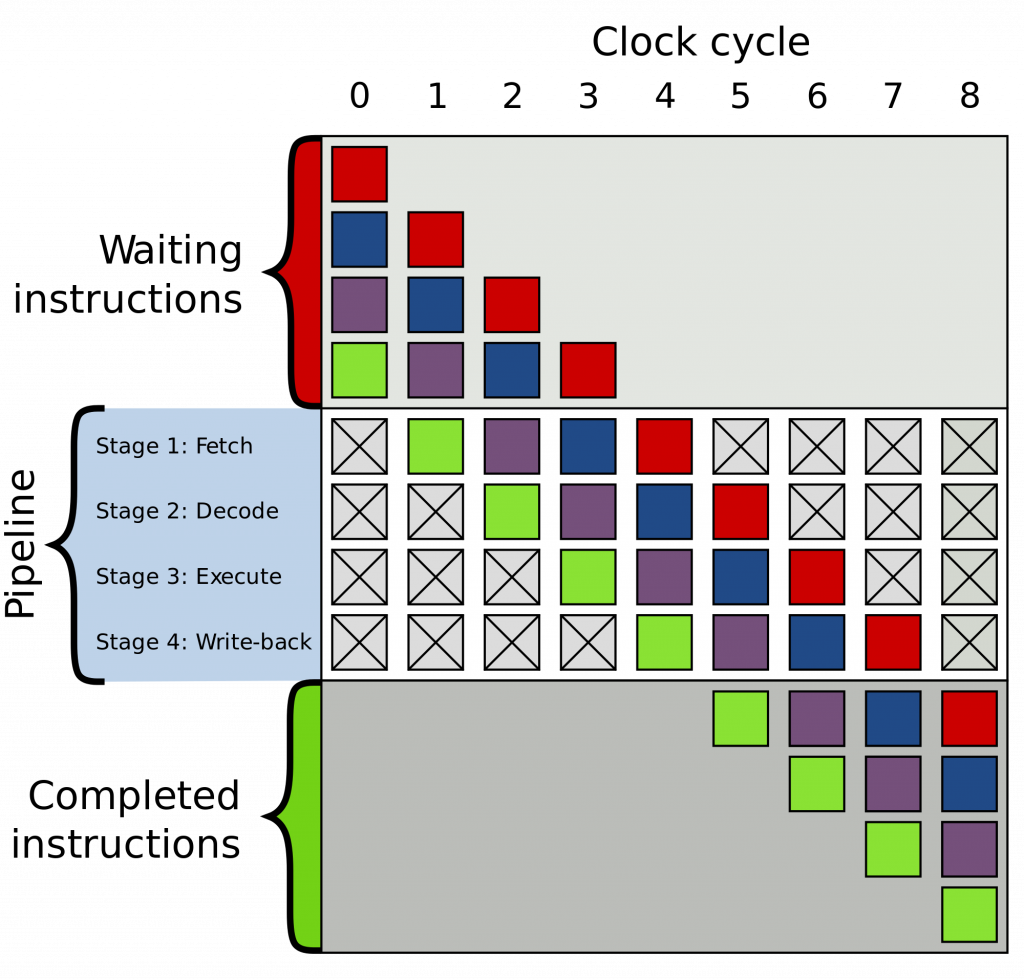

You may be wondering how this applies to speeding up analytics. To understand how we get there, we need to understand how a typical CPU, such as one built on the x86 architecture, processes instructions. In general, Central Processing Units (CPUs) process machine instructions one after another, in program order, and account for a very wide variety of scenarios and functions, which is necessary for general-purpose computing. Each instruction requires a few steps, including fetching the instruction, decoding and routing it, performing the proper operation, and reading from or writing to registers, memory, etc.

Let’s consider a hypothetical program with 100K assembly instructions running on a CPU that takes roughly five steps to process each instruction. If each instruction runs through every step before the CPU fetches the next one, and each step takes one clock cycle, then instead of waiting for 100K clock cycles we’re potentially waiting for 500K cycles. Many CPU architectures reduce the number of cycles required through a technique called pipelining (Fig. 2), which overlaps the steps of successive instructions so that a new instruction can start before the previous one has finished. While this improves processing time, CPUs are still built for general-purpose computing and carry more overhead than is necessary to perform only specific functions.

Figure 2. Generalized CPU Pipeline Architecture
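The arithmetic behind the 100K-instruction example can be sketched in a few lines of Python. This assumes an idealized CPU in which every step takes exactly one clock cycle and the pipeline never stalls; real processors have cache misses, branch mispredictions, and other hazards that this model ignores. The function names are made up for illustration.

```python
def sequential_cycles(instructions: int, stages: int) -> int:
    """Each instruction passes through every stage before the next one starts."""
    return instructions * stages


def pipelined_cycles(instructions: int, stages: int) -> int:
    """Ideal pipeline: fill it once, then one instruction completes per cycle."""
    if instructions == 0:
        return 0
    return stages + (instructions - 1)


instructions = 100_000  # the hypothetical program from the text
stages = 5              # the "roughly five steps" per instruction

seq = sequential_cycles(instructions, stages)
pipe = pipelined_cycles(instructions, stages)

print(seq)   # 500000
print(pipe)  # 100004
print(f"ideal pipelining speedup: {seq / pipe:.2f}x")
```

Even in this best case, the pipelined CPU still issues all 100K general-purpose instructions; pipelining hides the per-instruction steps but does not remove work the computation never needed.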

We can address the inherent overhead of a CPU with FPGAs by writing specific blocks of code that perform only the functions a given computation needs. This not only removes the roadblocks in a CPU, but because an FPGA is a run-time configurable array of silicon gates, we can create new functions or replicate existing ones so that the same or different computations run side by side and are truly parallel. This, as opposed to the multi-tasking that a typical CPU and OS perform, can speed up our computations by orders of magnitude.
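The difference between multi-tasking and true parallelism can be illustrated with a rough cycle-count model. The sketch below assumes the tasks are independent, that there is enough FPGA fabric to give each task its own dedicated block, and that context-switch overhead on the CPU is zero (which flatters the CPU). The task sizes are invented numbers, not measurements.

```python
def time_sliced_cycles(task_cycles: list[int]) -> int:
    """One shared execution unit: the tasks take turns, so their work adds up."""
    return sum(task_cycles)


def parallel_cycles(task_cycles: list[int]) -> int:
    """One dedicated block per task: done when the slowest block finishes."""
    return max(task_cycles, default=0)


# Hypothetical cycle counts for four independent computations.
tasks = [1200, 900, 1500, 1100]

shared = time_sliced_cycles(tasks)
dedicated = parallel_cycles(tasks)

print(shared)     # 4700
print(dedicated)  # 1500
```

In this model, replicating a block adds throughput without lengthening the wait, as long as the device has room for the extra copies.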

All of this is simply to say that we can hand-pick some of the most computationally heavy functions in our analytics calculations and offload them to an optimized platform to save cycles. If we can shorten the critical components of our computation time by offloading these functions to FPGAs and true parallelism, and do it over billions (or trillions) of computations, we can save immense amounts of time and, in many cases, bring computation down to near real time, literally processing data in motion.
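How much offloading helps depends on how much of the total run time the offloaded functions account for. One common way to estimate this is Amdahl's law, sketched below in Python. The fractions and the 100x kernel speedup are illustrative assumptions rather than measured figures, and the model ignores the cost of moving data to and from the accelerator.

```python
def overall_speedup(offloaded_fraction: float, kernel_speedup: float) -> float:
    """Amdahl's law: overall speedup when only part of the work gets faster."""
    if not 0.0 <= offloaded_fraction <= 1.0:
        raise ValueError("offloaded_fraction must be between 0 and 1")
    if kernel_speedup <= 0:
        raise ValueError("kernel_speedup must be positive")
    remaining = 1.0 - offloaded_fraction           # work that stays on the CPU
    accelerated = offloaded_fraction / kernel_speedup  # work moved to the FPGA
    return 1.0 / (remaining + accelerated)


# Assume the offloaded functions run 100x faster on the accelerator.
for fraction in (0.5, 0.9, 0.99):
    print(fraction, round(overall_speedup(fraction, 100.0), 2))
# 0.5 1.98
# 0.9 9.17
# 0.99 50.25
```

The takeaway matches the hand-picking advice above: the gain comes from choosing functions that dominate the run time, since the part left on the CPU quickly becomes the limit.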

Challenges to Hardware-Accelerated Analytics

We cannot talk about all the great things FPGAs can do for us without acknowledging some of the more obvious drawbacks. One of the most obvious is that we’re all moving to the cloud, right? Unfortunately, FPGAs do not move to the cloud as easily as software does. They are hardware, and the platforms they sit on must be hosted and managed.

FPGAs are also complex devices, and it takes skilled engineers and data scientists to get them just right and take full advantage of them. There is no lack of complexity and nuance in the art of data science and analytics. Knowing which algorithms to accelerate and at what time is no simple task, but with the right engineers we can achieve great efficiencies and performance improvements in our systems.

Open Platforms for Hardware-Accelerated Analytics

While the ability to consume large amounts of data and improve our processing time is awesome in and of itself, the accessibility of these platforms is just as critical. Being able not only to load your data onto these platforms but also to access the results is a key function of well-rounded analytics and data platforms. The question is, how do we take such a complex architecture and bring it to the masses?

In short, the answer is APIs. By wrapping hardware-accelerated analytics platforms in APIs and creating standard interfaces for consumption and interaction, we can open these platforms up for easier use. Common interfaces enable a variety of systems, partners, third parties, and other consumers to interact with complex platforms in an abstract and simplified manner. We can abstract away the complexity to make this powerful technology available to anyone with data and do it safely, securely, and in a well-known way.
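As a rough sketch of what such an abstraction might look like, the Python below defines one interface with two interchangeable backends. Every name here (`AnalyticsBackend`, `CpuBackend`, `FpgaBackend`, `select_backend`) is hypothetical, and the FPGA backend is deliberately left as a placeholder because the driver or service behind it would depend entirely on the platform.

```python
from abc import ABC, abstractmethod
from typing import Sequence


class AnalyticsBackend(ABC):
    """The interface callers see, regardless of what runs underneath."""

    @abstractmethod
    def moving_average(self, samples: Sequence[float], window: int) -> list[float]:
        ...


class CpuBackend(AnalyticsBackend):
    """Plain software implementation, usable when no accelerator is present."""

    def moving_average(self, samples: Sequence[float], window: int) -> list[float]:
        if window <= 0:
            raise ValueError("window must be positive")
        result = []
        running = 0.0
        for i, value in enumerate(samples):
            running += value
            if i >= window:
                running -= samples[i - window]  # drop the sample leaving the window
            if i >= window - 1:
                result.append(running / window)
        return result


class FpgaBackend(AnalyticsBackend):
    """Placeholder: a real version would hand the samples to an accelerator."""

    def moving_average(self, samples: Sequence[float], window: int) -> list[float]:
        raise NotImplementedError("no accelerator is attached in this sketch")


def select_backend(fpga_available: bool) -> AnalyticsBackend:
    """Callers ask for a backend and never need to know which one they got."""
    return FpgaBackend() if fpga_available else CpuBackend()


backend = select_backend(fpga_available=False)
averages = backend.moving_average([1.0, 2.0, 3.0, 4.0, 5.0], window=3)
print(averages)  # [2.0, 3.0, 4.0]
```

The calling code stays the same whether the work runs in software or on hardware, which is the property that lets partners and third parties use the platform without understanding the FPGA underneath.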

Final Thoughts

While it is exciting to go to the cloud and be able to scale in a seemingly unlimited way, it makes one wonder if we’re forgetting about the platforms and technology that drive this capability. I do not pretend to have a great answer to how to scale FPGA technology; however, I believe there is a need to focus on finding a way to make this powerful capability generally available to compute clusters and analytics platforms, whether they are on premises or cloud based. FPGAs can enable better analytics by processing data in motion, operating in near real time, and providing truly parallel operations.

Perhaps the answer is in general appliances that implement very commonly used functions and computations and can automatically offload these cycle-intensive operations. Or perhaps the answer is in an experience where our FPGA platforms are reconfigured at design time to optimize for our needs. The short answer is that there is no one great answer; however, if we can address computational challenges in thoughtful and innovative ways, we can move the needle in analytics in a significant and revolutionary manner. My greatest hope is that we can unlock new potential and breakthroughs in areas like cancer research, AI, and the life sciences, and in places where we struggle to find meaning and correlation in data sets or where our computations are so time-consuming and costly that progress is painfully slow or impossible.